In the past week, I’ve noticed numerous objections to thoroughly examining the 2,596 pages of the Google leak.

The question we should focus on instead is, “How can we maximize our testing and learning from these documents?”

SEO is an applied science: theory is a foundation for experiments, not the ultimate objective.

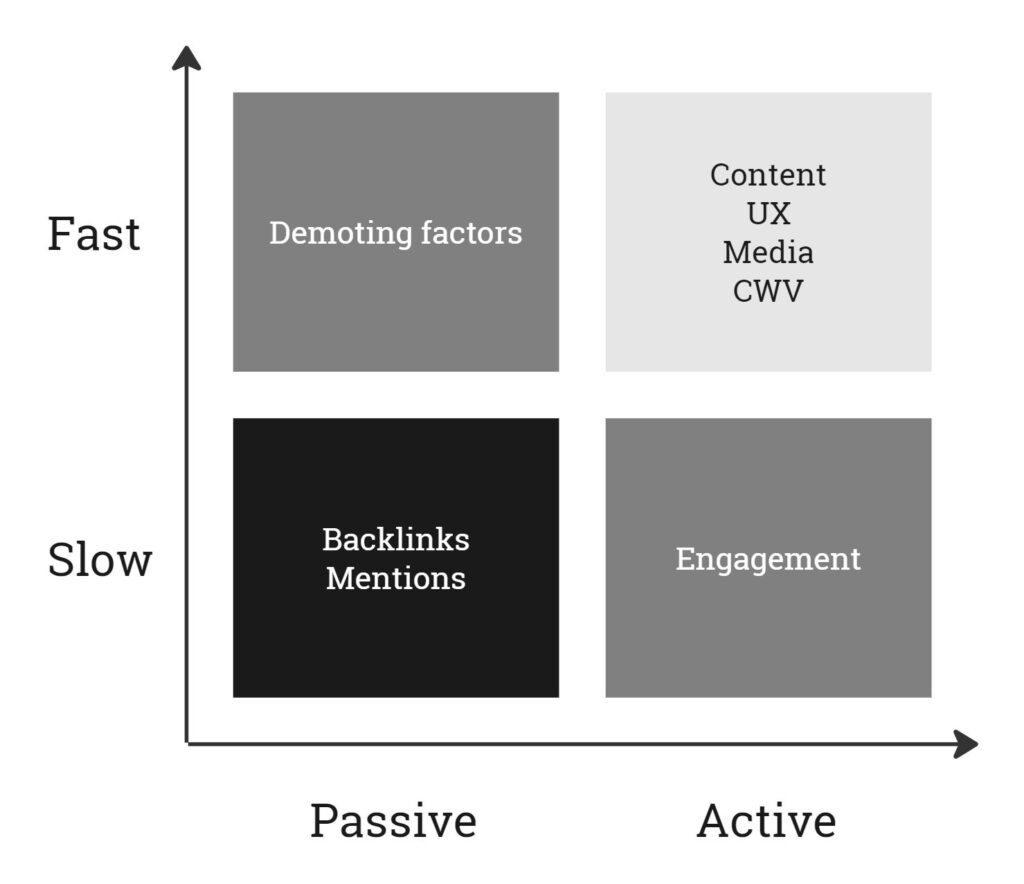

It’s hard to find more fertile ground for generating test ideas. However, not all factors can be tested the same way. They differ in type (number/integer: a range; Boolean: yes/no; string: a word or list) and in reaction time (how quickly a change to the factor shows up as a change in organic rank).

Consequently, we can conduct A/B tests on fast and active factors, whereas we need to resort to before/after tests for slow and passive ones.
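The distinction above can be written down as a small data structure. This is a minimal sketch, not anything taken from the leak: the class names, the `choose_design` helper, and the two example factors (including which one is labeled fast or slow) are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class FactorType(Enum):
    NUMBER = "number"    # a range
    BOOLEAN = "boolean"  # yes/no
    STRING = "string"    # a word or list


class Reaction(Enum):
    FAST = "fast"  # rank responds quickly to a change
    SLOW = "slow"  # rank responds slowly


@dataclass(frozen=True)
class RankingFactor:
    name: str
    factor_type: FactorType
    reaction: Reaction


def choose_design(factor: RankingFactor) -> str:
    """Fast factors suit A/B tests; slow ones need before/after tests."""
    if factor.reaction is Reaction.FAST:
        return "A/B test"
    return "before/after test"


# Hypothetical examples; the fast/slow labels are assumptions, not leak data.
factors = [
    RankingFactor("title_change", FactorType.STRING, Reaction.FAST),
    RankingFactor("site_authority", FactorType.NUMBER, Reaction.SLOW),
]
for factor in factors:
    print(f"{factor.name}: {choose_design(factor)}")
```

Tagging each factor this way before testing makes it harder to run an A/B test on something that cannot react within the test window.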

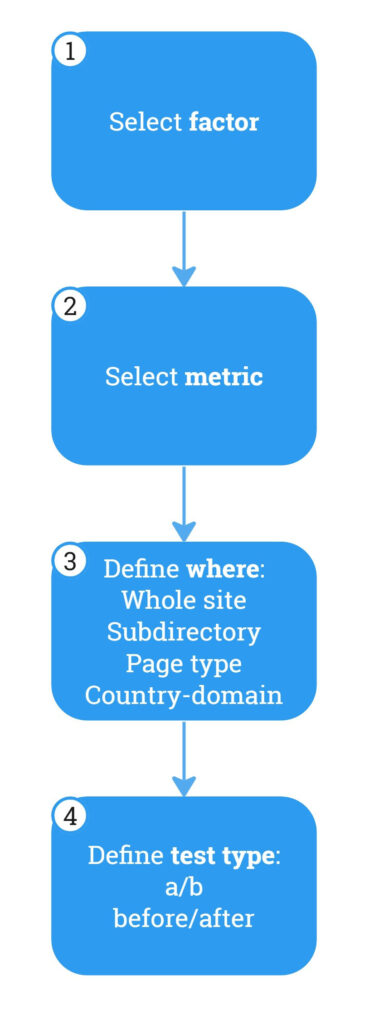

To systematically test ranking factors:

While many ranking factors in the data leak are represented as integers, indicating a spectrum, certain Boolean factors lend themselves to straightforward testing:

Factors within your direct control include:

Factors that hurt rankings (negative):

Influential Factors with Limited Direct Control:

Before implementing changes, establish a baseline for your performance in the specific area you intend to test, such as Core Web Vitals.

Based on the factors described in the leaked document and their potential impact on various metrics, here’s the alignment:

If you’re uncertain, run the test on a country-specific domain or another property with minimal risk exposure. For multilingual sites, consider implementing changes in one country first and comparing its performance against your primary market.

Isolating the test impact is crucial; focus on single-page alterations or specific subdirectories.
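One way to keep a test confined to a single subdirectory is to split its URLs into a control and a variant group and compare how each group changes. The sketch below is an assumption about how that could be done, not a method from the article: the hash-based assignment, the function names, and the use of clicks as the outcome metric are all illustrative choices.

```python
import hashlib
from statistics import mean


def assign_group(url: str) -> str:
    """Deterministically assign a URL to 'control' or 'variant' by hashing it."""
    digest = hashlib.sha256(url.encode("utf-8")).hexdigest()
    return "variant" if int(digest, 16) % 2 else "control"


def split_subdirectory(urls, prefix):
    """Keep only URLs under `prefix` and split them into two groups."""
    groups = {"control": [], "variant": []}
    for url in urls:
        if url.startswith(prefix):
            groups[assign_group(url)].append(url)
    return groups


def relative_lift(before, after, groups):
    """Mean change of the variant group minus mean change of the control group.

    `before` and `after` map each URL to a metric such as clicks.
    Both groups must be non-empty, otherwise `mean` raises StatisticsError.
    """
    def change(group):
        return mean(after[url] - before[url] for url in groups[group])

    return change("variant") - change("control")
```

Hashing the URL keeps the assignment stable between runs, so a page never switches groups halfway through a test. Subtracting the control group’s change removes movement that affected the whole subdirectory, such as seasonality.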

Concentrate tests on pages that target particular keywords (e.g., “Best X”) or user intents (e.g., “Read reviews”).

Keep in mind that some ranking factors apply across your entire site, such as site authority, while others are specific to individual pages, like click-through rates.

Ranking factors are components of an equation, so they can either complement or contradict each other.

Humans struggle to grasp functions with many variables. We therefore likely underestimate both how much goes into achieving a high rank score and how strongly a handful of variables can move the outcome.

However intricate the interplay among ranking factors, it should not keep us from experimenting.

Aggregators often find testing more straightforward than Integrators, benefiting from a wealth of comparable pages that yield more pronounced results. Conversely, Integrators, tasked with generating original content, encounter variations across each page, which can dilute test outcomes.

One of my preferred ways to deepen an understanding of SEO is to score the ranking factors yourself and then methodically challenge and test those assumptions. Create a spreadsheet that lists each ranking factor, assign each a value between zero and one based on its perceived importance, and multiply all the values together.
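The spreadsheet exercise is easy to reproduce in a few lines. This is a minimal sketch of the multiplication described above; the factor names and scores are made-up placeholders, not values from the leak.

```python
from math import prod


def rank_score(factor_scores: dict[str, float]) -> float:
    """Multiply all factor scores together; each must lie between 0 and 1."""
    for name, value in factor_scores.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    # Note: prod() of an empty collection is 1, so an empty sheet scores 1.0.
    return prod(factor_scores.values())


# Hypothetical self-assessment; replace with your own factors and scores.
my_scores = {"title_match": 0.9, "page_speed": 0.8, "backlinks": 0.5}
print(round(rank_score(my_scores), 2))  # 0.9 * 0.8 * 0.5
```

Because the scores are multiplied rather than added, a single weak factor drags the whole product down, and a factor scored at zero sinks it entirely. Changing one value and watching the product move is a quick way to see how much weight a handful of variables can carry.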

Testing provides only an initial insight into the significance of ranking factors. Monitoring lets us observe how relationships evolve over time, which leads to more substantial conclusions.

The idea is to track metrics that reflect ranking factors, such as click-through rate (CTR) as an indicator of title optimization, and plot them over time to assess whether optimization efforts are working. This is standard monitoring practice with a few new metrics added.
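As a rough illustration of that kind of monitoring, the sketch below aggregates CTR per ISO week and page type from exported performance rows. The row layout (`date`, `url`, `clicks`, `impressions`) and the rule that the first path segment defines the page type are assumptions; adapt both to your own export and URL structure.

```python
from collections import defaultdict
from datetime import date
from urllib.parse import urlparse


def page_type(url: str) -> str:
    """Assumed convention: the first path segment names the page type."""
    path = urlparse(url).path.strip("/")
    return path.split("/")[0] if path else "home"


def weekly_ctr(rows):
    """Return {(iso_year, iso_week, page_type): ctr} from daily rows."""
    totals = defaultdict(lambda: [0, 0])  # key -> [clicks, impressions]
    for row in rows:
        iso = date.fromisoformat(row["date"]).isocalendar()
        key = (iso[0], iso[1], page_type(row["url"]))
        totals[key][0] += row["clicks"]
        totals[key][1] += row["impressions"]
    # Skip groups without impressions to avoid dividing by zero.
    return {
        key: clicks / impressions
        for key, (clicks, impressions) in sorted(totals.items())
        if impressions
    }
```

The result is sorted by year, week, and page type, so it can be passed to whatever charting or dashboard tool you already use.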

Monitoring systems can be constructed using various platforms, including:

The choice of tool is secondary to selecting the appropriate metrics and URL paths for analysis.

To gauge the impact of optimizations, consider measuring metrics either by page type or a defined set of URLs over time. Here are some key metrics to track, although I encourage you to question and refine these thresholds based on your personal experience:

User Engagement:

Backlink Quality:

Page Quality:

Site Quality:

It’s ironic that the leak occurred shortly after Google began displaying AI-generated results (AI Overviews), as we can now leverage AI to identify SEO gaps based on the leaked information.

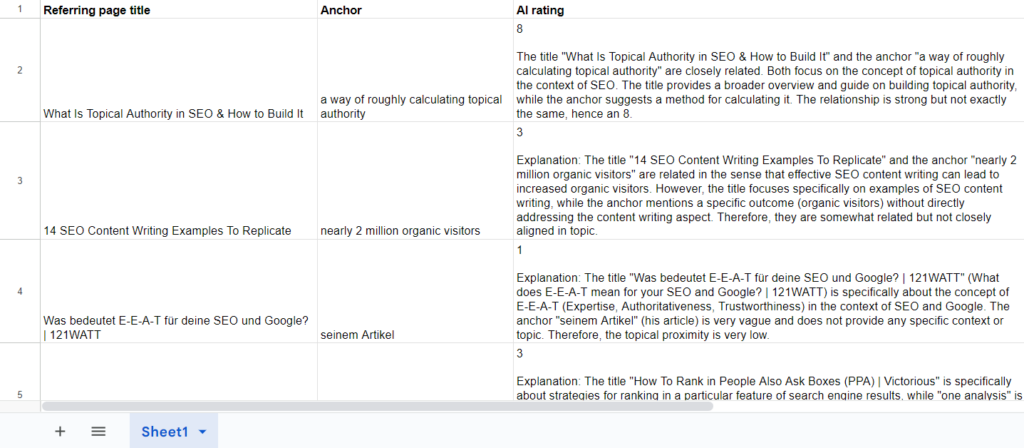

One method involves analyzing title matching between the source and target for backlinks. Utilizing common SEO tools, we can extract titles, anchor text, and surrounding content from both the referring and target pages.

Next, we can assess topical proximity or token overlap using various AI tools, Google Sheets/Excel integrations, or local large language models (LLMs). A simple prompt is enough, such as, “Rate the topical proximity of the title (column B) compared to the anchor (column C) on a scale of 1 to 10, with 10 representing an exact match and 1 indicating no relationship.”
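For the token-overlap half of that comparison, no AI tool is needed. The sketch below uses Jaccard similarity on lowercase word tokens, which is one simple way to measure overlap and an assumption on my part; it is not how Google scores anchors, and it will not capture topical proximity between differently worded phrases the way an LLM rating can.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def token_overlap(title: str, anchor: str) -> float:
    """Jaccard similarity: shared tokens divided by all distinct tokens."""
    title_tokens, anchor_tokens = tokens(title), tokens(anchor)
    if not title_tokens or not anchor_tokens:
        return 0.0
    shared = title_tokens & anchor_tokens
    combined = title_tokens | anchor_tokens
    return len(shared) / len(combined)


# Hypothetical target-page title and backlink anchor text.
print(token_overlap("Best Running Shoes 2024", "best running shoes"))  # 3 of 4 tokens shared
```

A score near 1 means the anchor reuses the title’s wording almost exactly, while a score near 0 flags backlinks worth reviewing by hand or with the prompt above.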

The recent revelation of Google’s ranking factors isn’t the first time a major platform’s algorithm has been laid bare:

Despite these leaks, there have been no reported instances of users or brands ethically hacking into these platforms.

The concept of platform engagement and its impact on gaming the system becomes intriguing, especially when considering the recent Google algorithm leak. Unlike platforms driven solely by user behavior, Google relies heavily on user intent signaled through searches.

Knowing the key elements shaping the algorithm is significant progress, even if the exact proportions remain elusive.

Google’s historic secrecy surrounding ranking factors is perplexing. Full disclosure might not be feasible, but Google could have incentivized a better web ecosystem of fast, user-friendly, visually appealing, and informative websites.

However, the ambiguity surrounding ranking criteria resulted in widespread speculation, fostering the proliferation of subpar content. This, in turn, prompted algorithm updates that inflicted financial losses on numerous businesses.

Original news from SearchEngineJournal