
John Mueller of Google hinted at possible changes to the site-wide helpful content signals that could give new pages a chance to rank. However, there is reason to doubt that such a change would be enough to help substantially.

When it launched in 2022, Google’s Helpful Content Update (HCU) introduced helpfulness as a site-wide signal. This meant that an entire website could be classified as unhelpful and lose its ability to rank, regardless of how helpful individual pages were.

Recently, the signals linked to the Helpful Content System were integrated into Google’s core ranking algorithm, transitioning them predominantly into page-level signals, albeit with a caveat.

According to Google’s documentation:

“Our core ranking systems are primarily designed to assess the helpfulness of individual pages, utilizing a range of signals and systems. However, we do incorporate some site-wide signals into our considerations as well.”

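Two key points emerge: Google’s core ranking systems assess helpfulness primarily at the page level, and some site-wide signals still factor into rankings.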

Several publishers have expressed concerns via tweets regarding the impact of site-wide signals on the ranking potential of new helpful pages. John Mueller has provided some reassurance.

Even if Google relaxes the helpfulness signals so that individual pages can rank, it is doubtful that this will help many of the websites that publishers and SEOs believe are held back by site-wide helpfulness signals.

A user on X (formerly Twitter) expressed frustration:

“It’s frustrating to see new content being penalized without getting a chance to accumulate positive user feedback. I publish something, and it immediately gets relegated to page 4, regardless of whether there are other articles on the same topic.”

Another user pointed out that if helpfulness signals are evaluated at the page level, theoretically, the more helpful pages should rank higher. However, that doesn’t seem to be the case in practice.

Google’s John Mueller replied to a question regarding sitewide helpfulness signals potentially impacting the rankings of new pages designed to be helpful. He hinted at a potential alteration in the application of these signals across the entire site.

Mueller’s tweet read:

“Yes, and I suppose for many heavily impacted sites, the ramifications will be felt across the entire site for now. It may require until the next update to observe similar significant effects, provided that the site’s new state is notably improved from before.”

After his initial tweet, Mueller added that the search ranking team is working on a way to surface high-quality pages from sites that may currently carry strong negative site-wide signals indicating unhelpful content, which could provide relief to some of the affected sites.

His tweet stated:

“I can’t guarantee anything, but the team addressing this issue is specifically examining ways to enhance Search results in the upcoming update. It would be fantastic to showcase more users the content that creators have diligently crafted, particularly on sites that prioritize helpfulness.”

Google’s Search Console notifies publishers of manual actions but doesn’t inform them when their sites experience ranking declines due to algorithmic factors like helpfulness signals. Publishers and SEOs lack visibility into whether their sites are affected by these signals. With the core ranking algorithm comprising numerous signals, it’s essential to remain open-minded about factors impacting search visibility post-update.

Here are five examples of changes that could influence rankings during a broad core update:

Various factors can influence rankings before, during, and after a core algorithm update. If rankings fail to improve, the cause may be a factor the publisher has not yet identified, a knowledge gap that stands in the way of a fix.

For instance, a publisher who recently saw rankings decline tied the date of the drop to the announcement of the Site Reputation Abuse update. It’s a logical inference: if a rankings drop coincides with an update, the update is the likely cause.

Here’s the tweet:

“Hey @searchliaison, feeling quite perplexed. Based on the timing, it seems we’ve been impacted by the Reputation Abuse algorithm. We’re not involved in coupon schemes, link selling, or any such practices.

Extremely baffled. We’ve maintained stability throughout and continue to revise/remove outdated content that doesn’t meet standards.”

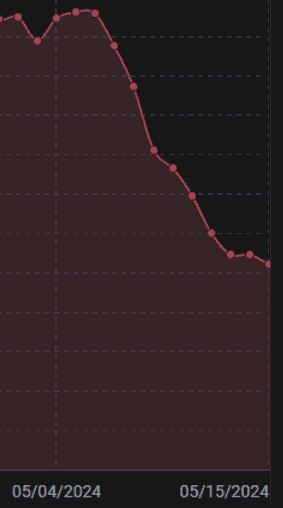

They attached a screenshot showing the decline in rankings.

SearchLiaison responded to the tweet by clarifying that Google is currently only issuing manual actions for site reputation abuse. While it’s reasonable to connect an update with a ranking issue, the exact cause of a rankings drop remains uncertain, particularly if other potential factors, like the five listed above, haven’t been ruled out. It’s worth reiterating: there is no guarantee that any single signal is the sole reason for a rankings decline.

In another tweet, SearchLiaison pointed out how some publishers mistakenly assumed they were subjected to an algorithmic spam action or were suffering from negative signals related to helpful content. SearchLiaison tweeted:

“I’ve reviewed numerous sites where concerns were raised about ranking losses, with owners suspecting algorithmic spam actions, only to find they weren’t affected.

…we have several systems in place to assess the helpfulness, usefulness, and reliability of individual content and sites (acknowledging they’re not flawless, as I’ve mentioned before, preempting anticipated inquiries about exceptions). Upon examining the same data available in Search Console as those concerned, it often doesn’t align with their assumptions.”

In the same tweet, SearchLiaison responded to someone who suggested that receiving a manual action might be fairer than facing an algorithmic action, pointing out that the notion is a misconception rooted in the same knowledge gap.

He tweeted:

“…you don’t really want to think, ‘Oh, I just wish I had a manual action, that would be so much easier.’ You really don’t want your site to catch the attention of our spam analysts. Plus, manual actions aren’t instantly processed.”

The overarching point here (and with 25 years of hands-on SEO experience, I can confidently assert this) is to remain open-minded to the possibility that there might be other underlying factors at play which remain undetected. While false positives do occur, it’s not always the case that Google errs; sometimes, it’s a matter of a knowledge gap. This is why I suspect that even if Google facilitates easier ranking for new pages, many may not see an improvement in rankings. If such a scenario unfolds, it’s crucial to maintain an open mind and consider alternative explanations.

Original news from SearchEngineJournal